Introduction and Applications of AI Infrastructure

Editor: Tony

Get AI up and running faster in any environment with AI-BOX: How to accelerate the entire lifecycle of AI/ML models and applications through integrated tools, repeatable workflows, flexible deployment options, and a trusted partner ecosystem.

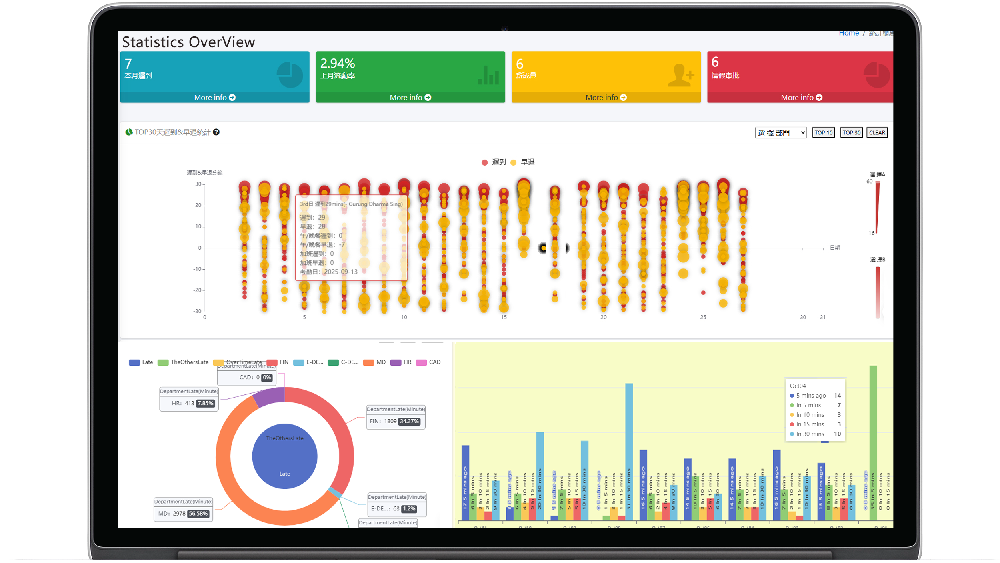

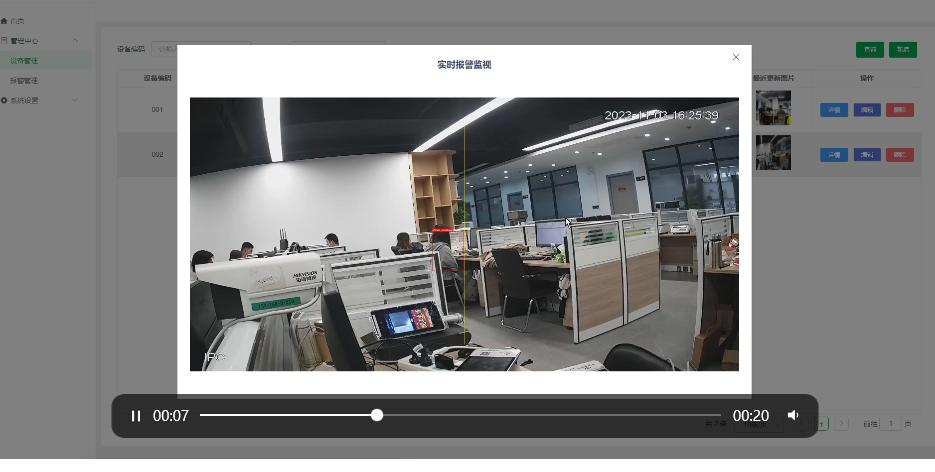

What is AI Infrastructure?

As artificial intelligence (AI) becomes increasingly integrated into our daily lives, establishing a structure that enables efficient and effective workflows is crucial. This is where artificial intelligence infrastructure (AI infrastructure) comes in.

A well-designed infrastructure helps data scientists and developers access data, deploy machine learning algorithms, and manage hardware computing resources.

AI infrastructure combines artificial intelligence and machine learning (AI/ML) technologies to develop and deploy reliable, scalable data solutions. It is the foundation that makes machine learning practical, allowing machines to perform tasks that resemble human reasoning.

Machine learning is a technique that trains computers to find patterns, make predictions, and learn from experience without being explicitly programmed. It underpins generative AI, which is typically implemented with deep learning, a machine learning technique that uses multi-layer neural networks to analyze and interpret large amounts of data.
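
To make "learning from experience without explicit programming" concrete, here is a minimal sketch in Python that recovers a hidden linear rule from examples using gradient descent. The data, learning rate, and epoch count are illustrative assumptions; real systems use frameworks and far larger models, but the loop of predicting, measuring the error, and adjusting parameters is the same basic idea.

```python
# Toy training data generated from a hidden rule, y = 2x + 1.
# The training code below is never told this rule; it must learn it from examples.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

def train_linear_model(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error (illustrative settings)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train_linear_model(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
print("prediction for x=10:", round(w * 10 + b, 2))
```

After training, `w` and `b` end up very close to 2 and 1, even though that rule never appears in the training function.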

AI Infrastructure Technology Stack

A technology stack is a set of technologies, frameworks, and tools used to build and deploy software applications. We can imagine these technologies being "stacked" together layer by layer to build the entire application. The AI infrastructure technology stack is organized into three basic layers that together help teams develop and deploy applications faster.

The Application Layer is where humans and computers collaborate through workflow tools. It includes end-to-end applications built on specific models as well as general-purpose applications for end users. End-user applications typically use open-source AI frameworks to create customizable models that can be tailored to specific business needs.

The Model Layer contains the model checkpoints that power the various capabilities of AI products. This layer requires a managed solution for deployment and includes three types of models:

General AI: Artificial intelligence designed to emulate human thinking and decision-making across a broad range of tasks. Examples include AI applications like OpenAI's ChatGPT and Ultralytics.

Specific AI: Artificial intelligence trained on very specific and relevant data to achieve higher precision. Examples include tasks like generating advertising copy and lyrics.

Hyperlocal AI: Artificial intelligence specialized for a single narrow domain, aiming for the highest accuracy and relevance, much like an expert in that field. Examples include writing scientific papers or creating interior design models.

The Infrastructure Layer consists of the hardware and software components needed to build, train, and deploy AI models. It includes hardware such as GPUs and other dedicated processors, as well as software for optimization and deployment. Cloud computing services are also part of this layer.
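
The three layers can be pictured as objects that call downward: the application calls a model, and the model runs on the infrastructure. This is a hypothetical illustration; the class names, the `demo-checkpoint` name, the `gpu:0` label, and the uppercase "model" are stand-ins, not any product's API.

```python
from dataclasses import dataclass

@dataclass
class InfrastructureLayer:
    """Bottom layer: the compute (e.g. GPUs or CPUs) where models actually run."""
    device: str  # hypothetical device label such as "gpu:0" or "cpu"

    def run(self, fn, *args):
        # A real stack would dispatch work to accelerator kernels; here we simply call fn.
        return fn(*args)

@dataclass
class ModelLayer:
    """Middle layer: a model checkpoint served on top of the infrastructure."""
    checkpoint_name: str
    infra: InfrastructureLayer

    def predict(self, text: str) -> str:
        # Stand-in "model" that uppercases its input; a real checkpoint would be a trained network.
        return self.infra.run(str.upper, text)

@dataclass
class ApplicationLayer:
    """Top layer: the end-user workflow that calls the model."""
    model: ModelLayer

    def handle_request(self, user_input: str) -> str:
        result = self.model.predict(user_input)
        return f"[{self.model.checkpoint_name} on {self.model.infra.device}] {result}"

infra = InfrastructureLayer(device="gpu:0")
model = ModelLayer(checkpoint_name="demo-checkpoint", infra=infra)
app = ApplicationLayer(model=model)
print(app.handle_request("hello stack"))
```

Because each layer only talks to the one below it, the infrastructure (for example, swapping a GPU for a cloud instance) can change without rewriting the application.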

What are the components of AI infrastructure?

Now that we've introduced the three layers involved in AI infrastructure, let's look at some of the components needed to build, deploy, and maintain AI models.

Data Storage

Data storage collects and retains many kinds of digital information, such as applications, network protocols, documents, media, address books, and user preferences, all stored as bits and bytes. A robust data storage and management system is crucial for storing, organizing, and retrieving the large volumes of data needed for AI training and validation.
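
As a minimal sketch of organizing data for training and validation, assuming a local filesystem and a JSON Lines layout with one directory per split (the layout and the function names are hypothetical), records can be written once and streamed back on demand. Production systems would more often use object storage or a distributed file system.

```python
import json
import tempfile
from pathlib import Path

def store_split(root: Path, split: str, records: list) -> Path:
    """Write the records for one split (e.g. "train" or "validation") as JSON Lines."""
    split_dir = root / split
    split_dir.mkdir(parents=True, exist_ok=True)
    path = split_dir / "data.jsonl"
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")  # one JSON object per line
    return path

def load_split(root: Path, split: str):
    """Stream records back one line at a time, so large files need not fit in memory."""
    path = root / split / "data.jsonl"
    with path.open(encoding="utf-8") as f:
        for line in f:
            yield json.loads(line)

root = Path(tempfile.mkdtemp())  # temporary directory standing in for real storage
store_split(root, "train", [{"x": 1, "label": "cat"}, {"x": 2, "label": "dog"}])
store_split(root, "validation", [{"x": 3, "label": "cat"}])
print(list(load_split(root, "validation")))
```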

Data Management

Data management refers to the process of collecting, storing, and using data, often with the help of data management software. It lets you understand what data you possess, where it is located, who owns it, who can see it, and how to access it. With proper controls in place, data management workflows provide the analytical insights needed to make better decisions.
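
The questions above (what data exists, where it lives, who owns it, who can see it) map naturally onto a data catalog. The sketch below is a hypothetical in-memory catalog with a simple owner/reader access rule; the dataset name and path are made up, and real data management software adds auditing, lineage tracking, and finer-grained policies.

```python
from dataclasses import dataclass, field

@dataclass
class DatasetEntry:
    """One catalog record: what the data is, where it lives, and who controls it."""
    name: str
    location: str  # e.g. a file path or bucket URI (illustrative only)
    owner: str
    readers: set = field(default_factory=set)

class DataCatalog:
    def __init__(self):
        self._entries = {}  # dataset name -> DatasetEntry

    def register(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def can_read(self, user: str, name: str) -> bool:
        # Owners can always read; anyone else must be listed as a reader.
        entry = self._entries.get(name)
        if entry is None:
            return False
        return user == entry.owner or user in entry.readers

    def locate(self, user: str, name: str) -> str:
        """Return the dataset location only if the user is allowed to see it."""
        if not self.can_read(user, name):
            raise PermissionError(f"{user} may not access {name}")
        return self._entries[name].location

catalog = DataCatalog()
catalog.register(DatasetEntry("sales-2023", "/data/sales/2023.parquet", owner="alice", readers={"bob"}))
print(catalog.locate("bob", "sales-2023"))
print(catalog.can_read("carol", "sales-2023"))
```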

Machine Learning Frameworks

Machine learning (ML), a subset of artificial intelligence (AI), uses algorithms to identify patterns in data and make predictions. Machine learning frameworks provide tools and libraries for designing, training, and validating machine learning models.
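
Frameworks such as PyTorch, TensorFlow, and scikit-learn supply the design, training, and validation pieces for you. To stay dependency-free, this sketch hand-rolls a nearest-centroid classifier with a `fit`/`predict`/`score` interface modeled loosely on scikit-learn's estimator convention; it is an illustration with made-up data, not that library's code.

```python
from statistics import mean

class NearestCentroidClassifier:
    """Tiny classifier with a scikit-learn-style fit/predict/score interface."""

    def fit(self, X, y):
        # "Training": compute the mean feature vector (centroid) of each class.
        self.centroids_ = {}
        for label in set(y):
            rows = [x for x, t in zip(X, y) if t == label]
            self.centroids_[label] = [mean(col) for col in zip(*rows)]
        return self

    def predict(self, X):
        # Assign each sample to the class whose centroid is closest (squared Euclidean distance).
        def dist(a, b):
            return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
        return [min(self.centroids_, key=lambda c: dist(x, self.centroids_[c])) for x in X]

    def score(self, X, y):
        # "Validation": fraction of held-out samples predicted correctly.
        preds = self.predict(X)
        return sum(p == t for p, t in zip(preds, y)) / len(y)

# Two well-separated clusters; the last two points are held out for validation.
X = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.3], [5.0, 5.1], [5.2, 4.9], [4.8, 5.0], [0.3, 0.2], [5.1, 5.3]]
y = ["a", "a", "a", "b", "b", "b", "a", "b"]
X_train, y_train, X_val, y_val = X[:6], y[:6], X[6:], y[6:]

model = NearestCentroidClassifier().fit(X_train, y_train)
print("validation accuracy:", model.score(X_val, y_val))
```

Keeping validation data out of `fit` is the key design choice: the score then estimates how the model behaves on data it has not seen.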

Machine Learning Operations

Machine learning operations (MLOps) is a set of workflow practices designed to streamline the production, maintenance, and monitoring of machine learning (ML) models. Drawing on DevOps and GitOps principles, MLOps aims to establish a continuously evolving process that integrates machine learning models seamlessly into the entire software development lifecycle.
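
One common MLOps pattern is an automated promotion gate: each retrained model is registered and evaluated, and it is promoted to production only if it outperforms the current version. The registry below is a hypothetical in-memory sketch with assumed accuracy values; it does not model any specific MLOps tool's API.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class ModelVersion:
    version: int
    val_accuracy: float  # metric measured on a held-out validation set

@dataclass
class ModelRegistry:
    """Hypothetical registry tracking every trained version and the one serving in production."""
    versions: List[ModelVersion] = field(default_factory=list)
    production: Optional[ModelVersion] = None

    def register(self, val_accuracy: float) -> ModelVersion:
        # Every training run is recorded, whether or not it is deployed.
        mv = ModelVersion(version=len(self.versions) + 1, val_accuracy=val_accuracy)
        self.versions.append(mv)
        return mv

    def promote_if_better(self, candidate: ModelVersion, min_gain: float = 0.0) -> bool:
        # Automated gate: replace production only if the candidate beats it by more than min_gain.
        current = self.production
        if current is None or candidate.val_accuracy > current.val_accuracy + min_gain:
            self.production = candidate
            return True
        return False

registry = ModelRegistry()
for acc in [0.81, 0.79, 0.86]:  # assumed accuracies from successive retraining runs
    candidate = registry.register(acc)
    promoted = registry.promote_if_better(candidate)
    print(f"v{candidate.version} acc={acc:.2f} promoted={promoted}")
print(f"in production: v{registry.production.version}")
```

The weaker second run is recorded but never deployed, which is how a continuous pipeline can retrain often without regressing production.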

Why is AI infrastructure critical?

A well-designed AI infrastructure lays the foundation for successful AI and machine learning (ML) operations. It helps drive innovation and achieve efficiency.

Advantages

AI infrastructure offers numerous benefits to AI operations and enterprises. The first is scalability: operations can be scaled up and down on demand, especially with cloud-based AI/ML solutions. The second is automation: automating repetitive tasks reduces errors and shortens turnaround time for deliverables.
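
Scaling on demand is often driven by a proportional rule like the one used by the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas * current utilization / target utilization). The sketch below applies that rule with assumed minimum and maximum bounds and illustrative utilization percentages; real autoscalers add refinements such as tolerances and stabilization windows, which this sketch omits.

```python
import math

def desired_replicas(current_replicas: int, current_util_pct: float, target_util_pct: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Proportional rule: resize so that average utilization moves toward the target."""
    raw = math.ceil(current_replicas * (current_util_pct / target_util_pct))
    # Clamp to configured bounds so the service never drops to zero or grows without limit.
    return max(min_replicas, min(max_replicas, raw))

print(desired_replicas(4, current_util_pct=90, target_util_pct=60))  # load spike: scale up
print(desired_replicas(4, current_util_pct=15, target_util_pct=60))  # quiet period: scale down
```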

Challenges

Despite its many advantages, AI infrastructure presents some challenges. A key challenge is the quantity and quality of data that must be processed. Because AI systems rely on massive amounts of data for learning and decision-making, traditional data storage and processing methods may be insufficient to handle the scale and complexity of AI workloads. Another major challenge is the demand for real-time analytics and decision-making. The infrastructure must process data quickly and efficiently, which requires integrating solutions that can handle large volumes of data with low latency.
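
A common way to cope with both data volume and real-time demands is to process data as a stream of batches instead of loading it all at once. This minimal sketch assumes a simulated numeric feed in place of a real message queue; it keeps only a running count and total, so memory use for the statistics stays constant and an updated result is available after every batch.

```python
from itertools import islice
from typing import Iterable, Iterator, List

def batched(stream: Iterable[float], batch_size: int) -> Iterator[List[float]]:
    """Yield fixed-size batches from a (possibly endless) stream without loading it all."""
    it = iter(stream)
    while batch := list(islice(it, batch_size)):
        yield batch

def running_mean(stream: Iterable[float], batch_size: int = 1000) -> Iterator[float]:
    """Emit an up-to-date mean after each batch, keeping only a count and a total."""
    count, total = 0, 0.0
    for batch in batched(stream, batch_size):
        count += len(batch)
        total += sum(batch)
        yield total / count  # available immediately, before the stream ends

# Simulated sensor feed; a real system might read from a message queue instead.
readings = (float(i % 10) for i in range(1_000_000))
for i, m in enumerate(running_mean(readings, batch_size=250_000)):
    print(f"after batch {i + 1}: mean = {m:.2f}")
```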

Applications

Several solutions can address these challenges. With AI-BOX cloud services, you can quickly build, deploy, and scale applications, and improve efficiency by proactively managing your environment while maintaining consistency and security. Red Hat Edge helps you deploy closer to where your data is collected and gain actionable analytics.
